Gradientspace 2.0

Gradientspace 1.0 came to a somewhat abrupt end in December 2018, when Tim Sweeney offered me a bag of money to open-source all of my code and join Epic Games. At the time, I had been struggling with trying to build 3D design tools in Unity and planning to switch to Unreal anyway, so the timing was fortuitous. TimS wanted me to come and build 3D Modeling Tools inside Unreal Editor, but I had ulterior motives - I wanted Unreal to be a better platform for building standalone creative tools, like I had been doing in Unity with my 3D printing app Cotangent, VR tools Simplex (for making shapes) and OrthoVR (for making leg prosthetics), and the dental-aligner design app I built for Archform. I figured, worst case, I would get paid to learn Unreal Engine, while also getting to experience what it was like being on the inside of the Fortnite rocketship (it was wild). So, I took the money.

In my first few months at Epic, I built the Interactive Tools Framework (ITF) - essentially a port of my C# framework frame3Sharp - and then began work on what became the GeometryCore module and GeometryProcessing plugin. This geometry infrastructure also started as a port of my C# geometry3Sharp library, but the wizards that joined my UE Geometry team took it so much further. The ITF and Geometry libraries are now central to many features in Unreal Engine. But even more important for me personally was that the ITF and Geometry libraries are usable both for building Tools inside Unreal Editor and also at Runtime inside any Unreal game.

Unfortunately for my personal ambitions, Epic Games Inc was not particularly interested in this Tools-at-Runtime aspect. Unreal is a Game Engine for Games, and getting some of the best game-engine-developers in the world to care about also making it a Real Time Engine for Creative Tools was…challenging. My pitch was always that this was how we were going to build more advanced editing tools into Fortnite Creative, but when Epic pivoted to focusing on UEFN (Unreal Editor for Fortnite), that direction was dead. This is why “how to use the ITF at Runtime” is strictly a thing you can learn about in articles on this website (exhibit A and exhibit B), and you’ll be hard-pressed to find anyone at Epic who will even admit that it’s possible.

As the years passed, I increasingly focused on building out the geometry libraries (which are inherently runtime-capable) while still getting in the occasional bit of framework-y infrastructure stuff, like adding Modeling Mode Extensibility, which I’m now using myself. In 2020 I started looking into Unreal’s end-user-programming/scripting environment Blueprints (BP), and after a few false starts figured out how to expose our geometry libraries in BP, resulting in the now-quite-popular Geometry Scripting. The obvious next step was to do the same for the ITF, which resulted in Scriptable Tools. For me personally, this was the critical point - artists could (finally) create their own customized interactive tools for tasks like 3D sculpting, painting, procedural spawning, and so on, without having to find a C++ developer to help.

By 2022 I was an “Engineering Fellow” - basically the highest level of IC at Epic Games - with a ridiculous salary and the freedom to pursue whatever I wanted to work on. But, what I had gone to Epic to do was, basically, done. Today, inside Unreal Editor, you (the end user) can build powerful creative Tools, whether you are a C++ engineer or a Blueprints-wielding artist. And you can, technically, build creative tools in a built Unreal “game” in the same way. Of course this infrastructure stack could be better in a million ways. But I felt like I had personally done my part in bringing these things into existence, and could hand them off to the stellar teams I was working with. This left me with…kind of…nothing to do? After 5 years in the games industry, I had lots of new ideas percolating, but they were not really aligned with Unreal Engine’s AAA-GDC-Demo culture, or with the Metaverse ambitions (at least, not Epic’s conception of the metaverse…). So, it was time for me to go.

The Gradientspace UE Toolbox, version 0.1

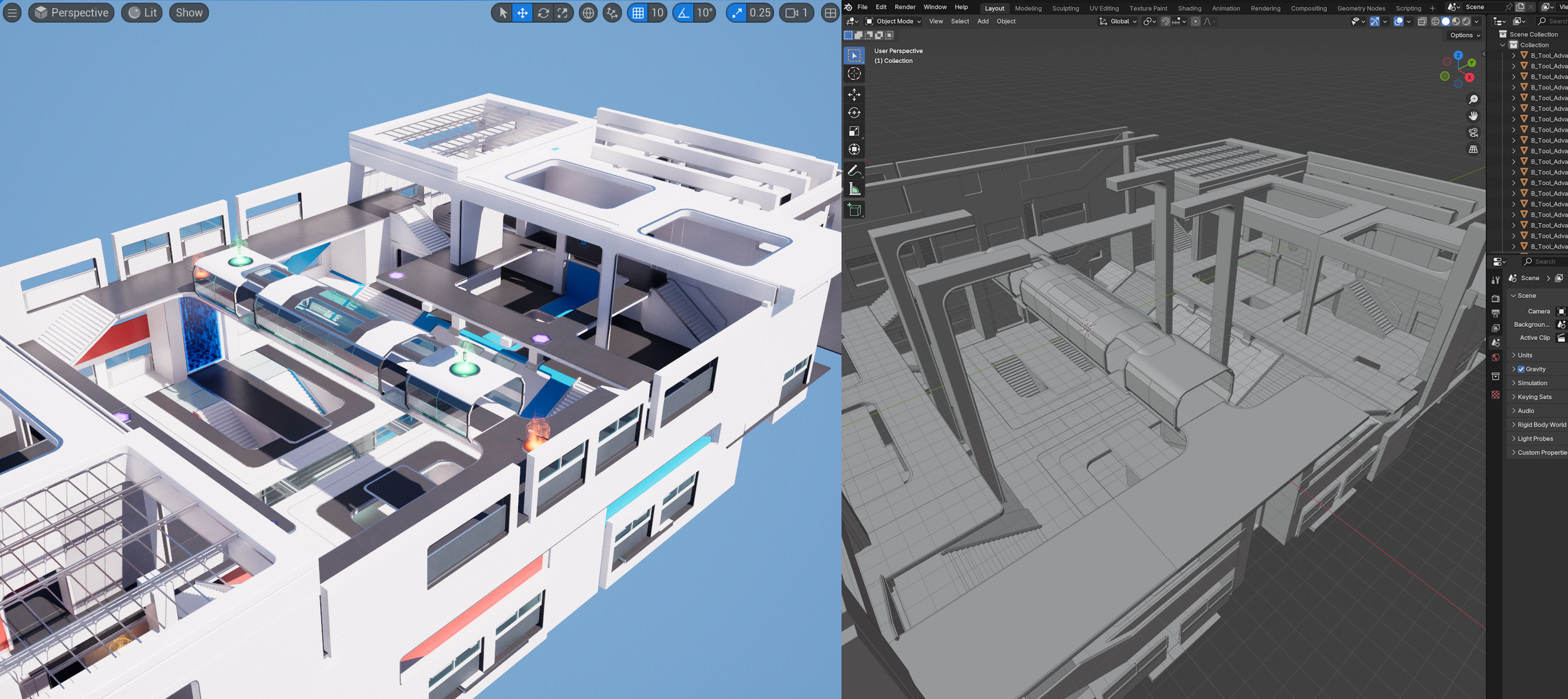

I left Epic in January 2024, and I’ve spent the last 9 months working on various projects that, someday, will hopefully come together into something great. But today I am writing this post because I’m taking the first tiny step - I’m releasing a small plugin for Unreal Engine that provides a set of features that I think will be helpful to many UE users. The plugin is exposed as a Modeling Mode Extension for now, so in Modeling Mode you will get a Gradientspace tab with my set of Tools, which work the same way as many existing Modeling Mode Tools (shouldn’t be unexpected, since I designed most of them!). Many of the Gradientspace Tools are focused on a new type of 3D modeling object called a ModelGrid, which is kinda like a live-editable voxel-grid where each cell can contain parametric shapes. It’s pretty cool and I’ll have a lot more to say about that below. There are also some Mesh Import/Export tools that can help you get polygonal meshes in and out of UE without losing the polygons (zomg polygons!!?!), a QuickMove Tool that implements a bunch of fast 3D transformation interactions, and a grab bag of optional Editor UI tweaks, like being able to add Mode buttons back to the main Toolbar.

You can download a precompiled version of the plugin here on the UE Toolbox page, for binary (launcher) 5.3, 5.4, and 5.5 engine installations. You can kinda hack it to work with a source build, too - check the Installation Guide page. I’m not releasing the plugin source yet; I explain why in the UE Toolbox FAQ. I’d love to hear your thoughts. You can get in touch on BlueSky, Mastodon, Twitter, or join the Gradientspace Discord to complain in real-time (I actually mean that…I seem to be complaint-operated, and will appreciate your contributions!).

One of my biggest issues building Modeling Mode at Epic was the pace of Engine releases. I wanted to be shipping Modeling Mode updates once a month. As an independent developer, now I can! So expect to see frequent updates, both bug fixes and new features (don’t worry, the plugin will let you know).

ModelGrids

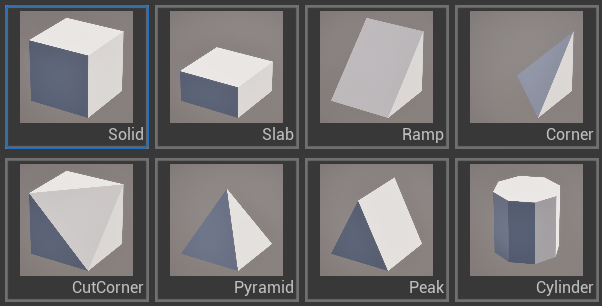

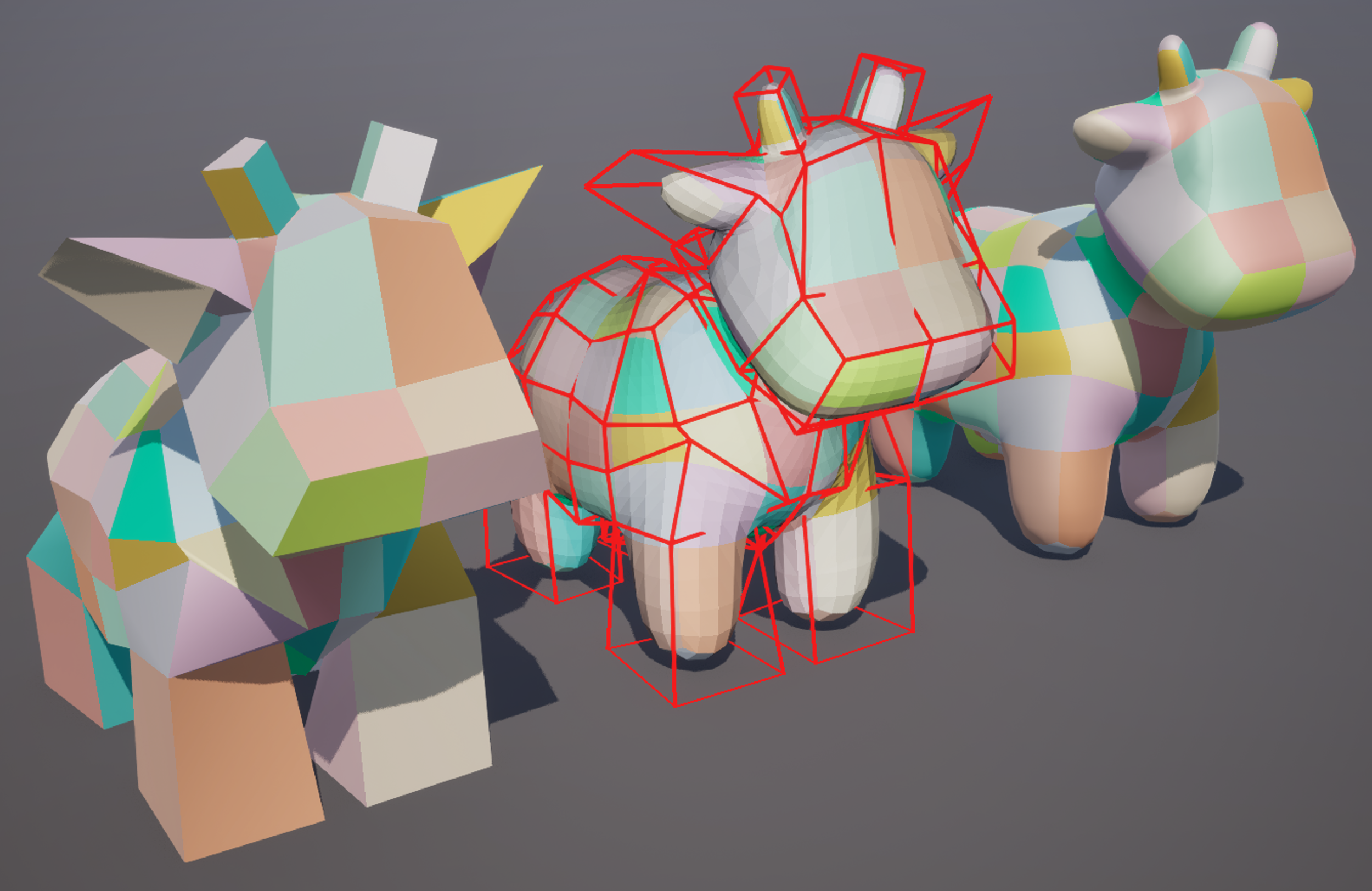

I just mentioned ModelGrids, and ModelGrid-related tools make up the bulk of the initial version of the UE Toolbox plugin, so what are they, exactly? Well, a ModelGrid is a 3D shape representation that lies somewhere between voxelized representations (think Minecraft) and grid-based continuous representations like SDFs, though closer to the former than the latter. The basic idea is to (1) divide space up into a uniform grid of little cuboid cells/blocks, and then (2) allow a subset of these cells to be filled with simple geometric shapes. In a voxelized representation, you get to choose from two options: filled box, or empty. In a ModelGrid, you have a slightly larger shape library (8 for now, shown below), and most of those shapes are parameterized, in that you can geometrically transform them in various ways within their containing cell (example shown below-right).
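To make the difference from plain voxels concrete, here is a minimal C++ sketch of a sparse uniform grid where each occupied cell stores an index into a small shape library, and empty cells are simply absent. The names (`SparseGrid`, `CellKey`, `Cell`) and the "index 0 is a solid box" convention are hypothetical; this illustrates the idea under those assumptions and is not the plugin's actual data structure.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <unordered_map>

// Hypothetical: size of the shape library mentioned in the post.
constexpr int kNumShapes = 8;

struct CellKey {
    int x, y, z;
    bool operator==(const CellKey& o) const { return x == o.x && y == o.y && z == o.z; }
};

struct CellKeyHash {
    std::size_t operator()(const CellKey& k) const {
        // Simple hash combine of the three integer coordinates.
        std::size_t h = std::hash<int>()(k.x);
        h = h * 31 + std::hash<int>()(k.y);
        h = h * 31 + std::hash<int>()(k.z);
        return h;
    }
};

struct Cell {
    std::uint8_t shape = 0;  // index into the shape library (0 = solid box, an assumption)
};

class SparseGrid {
public:
    explicit SparseGrid(double cellSize) : cellSize_(cellSize) {}

    void Set(CellKey k, Cell c) {
        assert(c.shape < kNumShapes);
        cells_[k] = c;
    }
    void Clear(CellKey k) { cells_.erase(k); }
    bool IsFilled(CellKey k) const { return cells_.count(k) != 0; }
    std::size_t NumFilled() const { return cells_.size(); }

    // Map a world-space point to the cell containing it. std::floor (rather
    // than truncation) keeps negative coordinates in the correct cell.
    CellKey CellAt(double px, double py, double pz) const {
        return {static_cast<int>(std::floor(px / cellSize_)),
                static_cast<int>(std::floor(py / cellSize_)),
                static_cast<int>(std::floor(pz / cellSize_))};
    }

private:
    double cellSize_;
    std::unordered_map<CellKey, Cell, CellKeyHash> cells_;
};
```

A plain voxel grid is the special case where every filled cell has shape index 0 and no per-cell parameters.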

So geometrically, ModelGrids can represent a much larger class of shapes than voxels. However, the parameterization of each cell is heavily discretized: if you look closely, you will notice that when I am resizing and translating above-right, the shape makes clear “jumps”. This is because each linear-dimensional parameter (i.e. X/Y/Z scales and translations) only supports 16 steps. This makes it easy to “eyeball” exact sizes without resorting to typing numbers (and, critically, 16 steps means each of these discretized parameters can be packed into just 4 bits).
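The bit budget works out neatly: 16 steps is exactly 4 bits, so six linear parameters (X/Y/Z scale and X/Y/Z translation) fit in 24 bits. The sketch below assumes that field layout and a "step + 1 sixteenths of a cell" scale mapping; both are illustrative guesses, not the actual ModelGrid encoding.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical layout: six 4-bit fields (X/Y/Z scale, then X/Y/Z translation)
// packed into the low 24 bits of a uint32_t.
struct CellParams {
    std::uint8_t scale[3];      // each step in [0, 15]
    std::uint8_t translate[3];  // each step in [0, 15]
};

std::uint32_t PackParams(const CellParams& p) {
    std::uint32_t bits = 0;
    for (int i = 0; i < 3; ++i) {
        assert(p.scale[i] < 16 && p.translate[i] < 16);
        bits |= std::uint32_t(p.scale[i]) << (4 * i);
        bits |= std::uint32_t(p.translate[i]) << (4 * (i + 3));
    }
    return bits;
}

CellParams UnpackParams(std::uint32_t bits) {
    CellParams p{};
    for (int i = 0; i < 3; ++i) {
        p.scale[i] = static_cast<std::uint8_t>((bits >> (4 * i)) & 0xF);
        p.translate[i] = static_cast<std::uint8_t>((bits >> (4 * (i + 3))) & 0xF);
    }
    return p;
}

// Assumed mapping from a scale step to a fraction of the cell size:
// step 0 = 1/16 of the cell, step 15 = the full cell.
double ScaleStepToFraction(std::uint8_t step) { return (step + 1) / 16.0; }
```

Because every value is an exact multiple of 1/16, there is no floating-point drift when a cell is edited repeatedly: the stored state is always one of 16 discrete steps per parameter.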

In terms of color and material, each cell can have a unique RGB color (a full 8 bits per channel), and can also be assigned a UE Material (the cell colors will come through as Vertex Color in the Material). Per-face colors are also supported (but not per-face Materials, for now).
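One way the per-cell color, optional per-face colors, and single per-cell Material could fit together is sketched below. `CellAppearance`, the face ordering, and the `uint16_t` material slot index are all assumptions made for illustration.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <optional>

struct Color8 { std::uint8_t r, g, b; };  // 8 bits per channel

// Hypothetical per-cell appearance: one cell color, optional per-face color
// overrides, and a single material slot for the whole cell (no per-face material).
struct CellAppearance {
    Color8 cellColor{255, 255, 255};
    std::array<std::optional<Color8>, 6> faceColors{};  // +X, -X, +Y, -Y, +Z, -Z
    std::uint16_t materialIndex = 0;

    // The color a mesher would write as vertex color for a given face.
    Color8 FaceColor(int face) const {
        assert(face >= 0 && face < 6);
        return faceColors[face] ? *faceColors[face] : cellColor;
    }
};

// Pack an 8-bit-per-channel color into 24 bits (0xRRGGBB).
std::uint32_t PackRGB(Color8 c) {
    return (std::uint32_t(c.r) << 16) | (std::uint32_t(c.g) << 8) | std::uint32_t(c.b);
}
```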

The ModelGrid itself is an abstract data type. In terms of “UE things”, I have a UModelGrid that is analogous to a UDynamicMesh, which can either be stored in a UModelGridAsset, or directly inside a UModelGridComponent, which in turn can live inside a UModelGridActor. The Component supports overriding its internal stored ModelGrid with a ModelGrid Asset. So a UModelGridActor/Component can store a ModelGrid “in the level” (like DynamicMeshComponent) or reference an Asset (like StaticMeshComponent).
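The asset-override behavior can be illustrated in plain C++, using stand-in types rather than UE's `UObject` classes. `Grid`, `GridAsset`, and `GridComponent` are hypothetical names; the only point is that reads and edits go to the asset's grid when an override is set, and to the component's own grid otherwise.

```cpp
#include <memory>
#include <utility>

// Stand-in for the abstract ModelGrid data (hypothetical, plain C++, not UE types).
struct Grid { int revision = 0; };

// Stand-in for an asset that owns a shareable grid.
struct GridAsset { Grid grid; };

// Hypothetical component: stores its own grid "in the level" unless an asset
// override is set, in which case all reads and edits go to the asset's grid.
class GridComponent {
public:
    void SetAssetOverride(std::shared_ptr<GridAsset> asset) { override_ = std::move(asset); }
    void ClearAssetOverride() { override_.reset(); }
    bool UsesAsset() const { return override_ != nullptr; }

    const Grid& GetGrid() const { return override_ ? override_->grid : localGrid_; }
    Grid& GetEditableGrid() { return override_ ? override_->grid : localGrid_; }

private:
    Grid localGrid_;                       // like a DynamicMeshComponent's own mesh
    std::shared_ptr<GridAsset> override_;  // like a StaticMeshComponent's asset reference
};
```

Clearing the override falls back to the component's local grid, which is left untouched while the asset is in use.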

In either case, the ModelGridComponent does not have a visual representation directly - the ModelGridActor will dynamically spawn a suitable MeshComponent if needed. Currently UDynamicMeshComponent is used for this, however a more efficient custom MeshComponent is in-progress.

The reason for this separation is that I think a ModelGrid will be useful as an approximate representation for complex assemblies of scene geometry - ie, a type of higher-level ‘collision shape’ or gameplay volume which can easily be created, and even dynamically generated or modified in-game. There are also potential use cases for dynamically-generated LODs / HLODs that I would like to explore.

I have built out quite a bit of tooling around ModelGrids already. Voxel objects created in MagicaVoxel can be imported as ModelGrids, and images can also be imported and converted to ModelGrids in various ways. Please get in touch if you have a voxel format you would like to see supported! 3D meshes can also be voxelized into ModelGrids. Currently this is limited to Solid cells, but an algorithm that can solve for closer approximations using the full shape library is in progress. This conversion can transfer vertex colors, including on the interior of the volume.
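Images can be converted "in various ways"; one simple possibility, sketched below as an assumption rather than the Toolbox's actual importer, turns every sufficiently opaque pixel into a solid cell in a one-cell-thick vertical slab and carries the pixel color over as the cell color. `Image`, `ImportedCell`, and the alpha threshold of 128 are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct RGBA { std::uint8_t r, g, b, a; };

struct Image {
    int width = 0, height = 0;
    std::vector<RGBA> pixels;  // row-major, top row first
};

struct ImportedCell { int x, y, z; std::uint8_t r, g, b; };

// Hypothetical mapping: image columns -> X, image rows -> Z (up), all cells at Y = 0.
std::vector<ImportedCell> ImageToCells(const Image& img, std::uint8_t alphaThreshold = 128) {
    assert(img.pixels.size() == std::size_t(img.width) * std::size_t(img.height));
    std::vector<ImportedCell> cells;
    for (int row = 0; row < img.height; ++row) {
        for (int col = 0; col < img.width; ++col) {
            const RGBA& p = img.pixels[std::size_t(row) * img.width + col];
            if (p.a < alphaThreshold) continue;  // transparent pixels stay empty
            int z = img.height - 1 - row;        // flip so the image's top row is highest
            cells.push_back({col, 0, z, p.r, p.g, p.b});
        }
    }
    return cells;
}
```

Other mappings (extruding by brightness into a heightfield, for example) would only change how each pixel is turned into cells.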

The ModelGrid Editor tool is the main show, where many different editing interactions are available, from single-cell clicking to 3D volumetric drawing, layer-based add and remove, flood-filling, and so on. Selection and parameter editing with both in-viewport 3D gizmos and a custom property panel are available, and it’s possible to do things like draw the grid “on” the surface of a mesh in the level. I expect this Tool to expand quite a bit over coming releases, based on user feedback.
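Flood-filling in a grid like this is typically a breadth-first search over neighboring cells. The sketch below fills every empty cell that is 6-connected (shares a face) with a seed cell inside a bounded dense grid. `DenseGrid` and `FloodFill` are hypothetical names, and the real Tool may use different connectivity, bounds, or fill rules.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <queue>
#include <vector>

// Hypothetical dense occupancy grid used only to illustrate flood fill.
struct DenseGrid {
    int nx, ny, nz;
    std::vector<std::uint8_t> filled;  // 0 = empty, 1 = filled

    DenseGrid(int x, int y, int z)
        : nx(x), ny(y), nz(z), filled(std::size_t(x) * y * z, 0) {}

    bool InBounds(int x, int y, int z) const {
        return x >= 0 && y >= 0 && z >= 0 && x < nx && y < ny && z < nz;
    }
    std::uint8_t& At(int x, int y, int z) {
        assert(InBounds(x, y, z));
        return filled[(std::size_t(z) * ny + y) * nx + x];
    }
};

// Breadth-first fill of every empty cell 6-connected to the seed cell.
// Returns the number of cells that were filled.
int FloodFill(DenseGrid& g, int sx, int sy, int sz) {
    if (!g.InBounds(sx, sy, sz) || g.At(sx, sy, sz)) return 0;
    static const std::array<std::array<int, 3>, 6> kNbrs = {{
        {1, 0, 0}, {-1, 0, 0}, {0, 1, 0}, {0, -1, 0}, {0, 0, 1}, {0, 0, -1}}};
    std::queue<std::array<int, 3>> frontier;
    g.At(sx, sy, sz) = 1;  // mark on enqueue so no cell is queued twice
    frontier.push({sx, sy, sz});
    int count = 1;
    while (!frontier.empty()) {
        std::array<int, 3> c = frontier.front();
        frontier.pop();
        for (const auto& d : kNbrs) {
            int x = c[0] + d[0], y = c[1] + d[1], z = c[2] + d[2];
            if (g.InBounds(x, y, z) && !g.At(x, y, z)) {
                g.At(x, y, z) = 1;
                frontier.push({x, y, z});
                ++count;
            }
        }
    }
    return count;
}
```

Using an explicit queue instead of recursion avoids stack overflows on large fills.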

Helpful utility tools for things like cropping a grid, modifying the origin/pivot cell, and managing the Component/Asset grid are available. And finally, a ModelGrid can be converted to Static or Dynamic Meshes using various strategies which can (eg) resolve and remove hidden faces, simplify the triangulation while taking color/material into account, and compute output polygroups in various ways. One useful workflow here is to use ModelGrids to quickly block out initial quad-topology for SubD modelling.
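Hidden-face removal is the simplest of these meshing strategies to illustrate: a face of a solid cell is only worth emitting if the cell across it is empty. This sketch assumes every filled cell is a full solid box; with partial or parameterized shapes, a real mesher would also have to check whether the neighbor actually covers the shared face. The names are hypothetical.

```cpp
#include <array>
#include <set>
#include <vector>

using Cell3 = std::array<int, 3>;

// Outward directions for the six faces of a cell: +X, -X, +Y, -Y, +Z, -Z.
const std::array<Cell3, 6> kFaceDirs = {{
    {1, 0, 0}, {-1, 0, 0}, {0, 1, 0}, {0, -1, 0}, {0, 0, 1}, {0, 0, -1}}};

struct Face {
    Cell3 cell;  // the solid cell that owns the face
    int dir;     // index into kFaceDirs
};

// Emit only faces of solid cells whose neighbor across the face is empty.
// Faces shared by two solid cells are interior and can never be seen.
std::vector<Face> VisibleFaces(const std::set<Cell3>& solid) {
    std::vector<Face> faces;
    for (const Cell3& c : solid) {
        for (int d = 0; d < 6; ++d) {
            Cell3 n = {c[0] + kFaceDirs[d][0], c[1] + kFaceDirs[d][1], c[2] + kFaceDirs[d][2]};
            if (solid.count(n) == 0) faces.push_back({c, d});
        }
    }
    return faces;
}
```

Two adjacent cubes produce 10 faces instead of 12, and for large solid regions the savings grow with volume.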

Mesh Import & Export

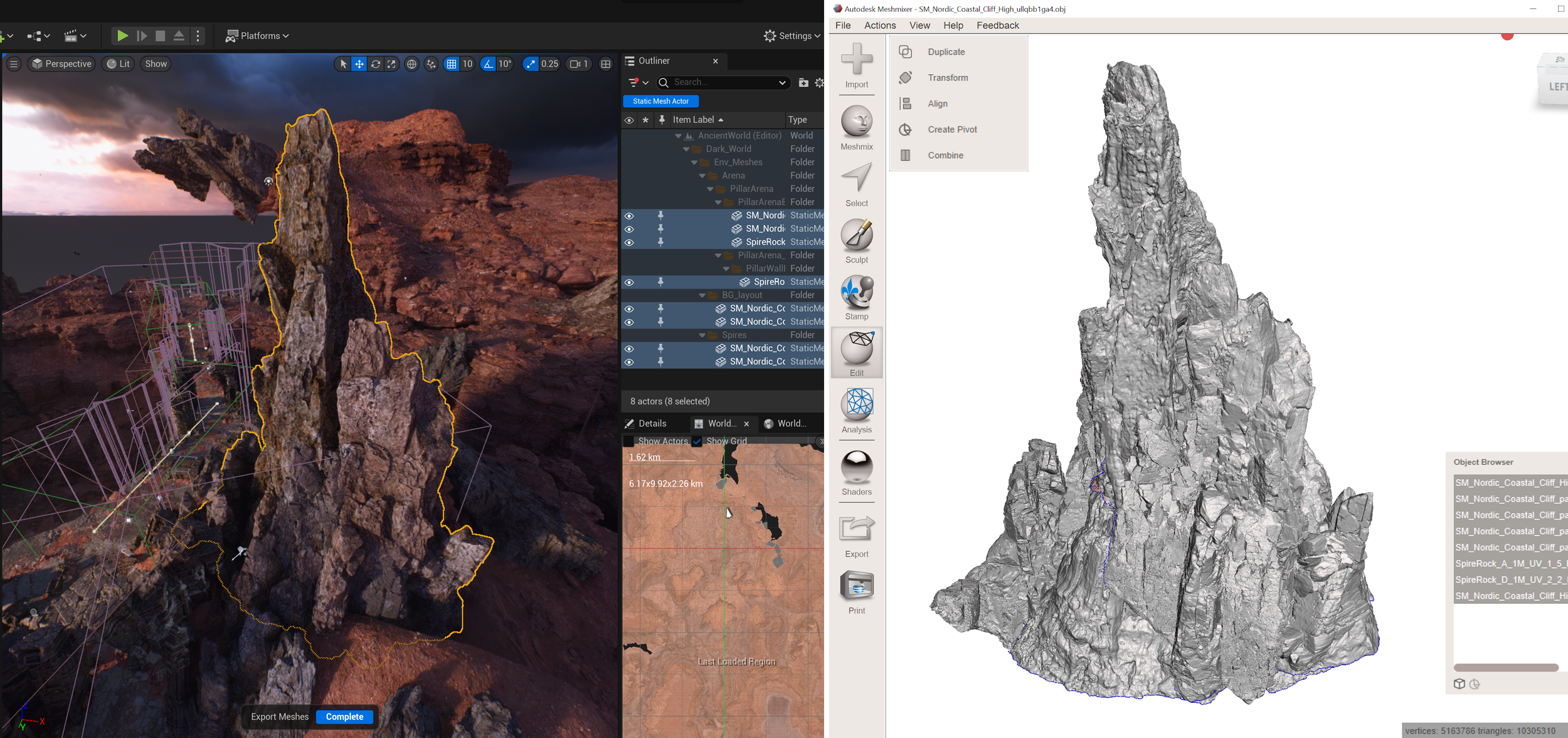

Once you’ve made a cool ModelGrid, converted to a mesh, done some SubD, and then sculpted a bit, you might want to export your resulting art. To support exporting the actual polygons (defined as polygroups), the Toolbox includes a custom Mesh Export tool. This currently only supports exporting OBJ-format meshes, but FBX support is a work in progress. The Mesh Export tool can also export a set of selected Actors in the level, while maintaining their world-space positions.
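Exporting polygons to OBJ is straightforward once the polygons are known, because an OBJ `f` line can list any number of vertices. The sketch below assumes the polygroups have already been converted into ordered vertex loops (that step is not shown) and just writes positions and n-gon faces, remembering that OBJ indices are 1-based. `WriteOBJ` and `Polygon` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

struct Vec3 { double x, y, z; };

// A polygon is a list of 0-based vertex indices, e.g. a quad recovered
// from one polygroup of a triangle mesh.
using Polygon = std::vector<int>;

// Write vertex positions and n-gon faces as Wavefront OBJ text.
// OBJ face indices are 1-based, so each index is shifted by one on output.
std::string WriteOBJ(const std::vector<Vec3>& verts, const std::vector<Polygon>& polys) {
    std::ostringstream out;
    out.precision(9);  // the default of 6 significant digits is too coarse for geometry
    for (const Vec3& v : verts) out << "v " << v.x << ' ' << v.y << ' ' << v.z << '\n';
    for (const Polygon& poly : polys) {
        assert(poly.size() >= 3);
        out << 'f';
        for (int idx : poly) {
            assert(idx >= 0 && std::size_t(idx) < verts.size());
            out << ' ' << (idx + 1);
        }
        out << '\n';
    }
    return out.str();
}
```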

A Mesh Import tool is also available, and just like the Export tool, it can preserve imported quads/polys as polygroups on Unreal triangle meshes. The Import tool can bring a mesh directly into the level as a DynamicMesh or a Volume, or create a StaticMeshActor and Asset. Again, only OBJ is currently supported, so this isn’t a replacement for bringing in all your UE assets. However, if you, say, want to bring in a high-res scan or sculpt to bake a normal map for a lower-poly asset, you can do that as a DynamicMesh and save yourself a lot of time. And combined with the Transfer tool in Modeling Mode, you can bring in a polymesh version of an FBX asset as OBJ and transfer the quad-polygroups into the “real” asset.

QuickMove

The last tool in the 0.1 Toolbox is the QuickMove Tool, which provides a suite of fast-but-constrained ways to translate and rotate 3D objects in the level. This is intended to be an alternative to using the 3D gizmo - you can simply click and drag objects, with no need to select first or acquire tiny gizmo handles. There are ample hotkeys to jump between the various transform modes, which include some powerful context-sensitive options like a “point” mode that rotates an object so that a clicked location on it faces another point in the scene, an “attach to camera” mode that is similar to how positioning works in UEFN, and the option to have a translating object collide with selected scene geometry. In addition, the Tab hotkey is (optionally) registered as a shortcut for this Tool, so you can quickly jump to it.
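The “point” interaction boils down to a shortest-arc rotation. Assuming (my interpretation, not a description of the Tool's code) that the object rotates about its pivot so that the pivot-to-clicked-point direction aims at the target, the rotation can be computed as a quaternion like this. All types and function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct V3 { double x, y, z; };
struct Quat { double w, x, y, z; };

V3 Sub(V3 a, V3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
double Dot(V3 a, V3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
V3 Cross(V3 a, V3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
V3 Normalize(V3 a) {
    double len = std::sqrt(Dot(a, a));
    assert(len > 0);
    return {a.x / len, a.y / len, a.z / len};
}

// Shortest-arc rotation taking direction `from` onto direction `to`.
Quat RotationBetween(V3 from, V3 to) {
    V3 f = Normalize(from), t = Normalize(to);
    double d = Dot(f, t);
    if (d < -0.999999) {
        // (Nearly) opposite: rotate 180 degrees about any axis perpendicular to f.
        V3 axis = Cross({1, 0, 0}, f);
        if (Dot(axis, axis) < 1e-12) axis = Cross({0, 1, 0}, f);
        axis = Normalize(axis);
        return {0, axis.x, axis.y, axis.z};
    }
    // (1 + cos, sin * axis) normalizes to (cos(theta/2), sin(theta/2) * axis).
    V3 c = Cross(f, t);
    Quat q{1 + d, c.x, c.y, c.z};
    double n = std::sqrt(q.w * q.w + q.x * q.x + q.y * q.y + q.z * q.z);
    return {q.w / n, q.x / n, q.y / n, q.z / n};
}

// Rotate v by unit quaternion q: v + 2w(u x v) + 2 u x (u x v), with u = (x, y, z).
V3 Rotate(Quat q, V3 v) {
    V3 u{q.x, q.y, q.z};
    V3 uv = Cross(u, v), uuv = Cross(u, uv);
    return {v.x + 2 * (q.w * uv.x + uuv.x), v.y + 2 * (q.w * uv.y + uuv.y),
            v.z + 2 * (q.w * uv.z + uuv.z)};
}

// Hypothetical "point" interaction: rotate about the object's pivot so the
// direction pivot->clicked ends up aiming at target.
Quat PointAt(V3 pivot, V3 clicked, V3 target) {
    return RotationBetween(Sub(clicked, pivot), Sub(target, pivot));
}
```

The shortest-arc choice is what keeps the interaction predictable: the object never spins around the aim axis more than it has to.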

Editor Quality-of-Life Improvements

You might be on the fence about whether or not any of these Tools are going to help your workflows that much. And installing a Plugin and keeping it updated is inevitably a bit of a hassle. So to sweeten the pot, I spent some time implementing “Editor UI hacks” that I frequently see requested by UE users.

In this initial release, I have made it possible to configure a startup Editor Mode in the Gradientspace UE Toolbox Project Settings. So, if you are like me and spend most of your time in Modeling Mode, now you don’t have to switch back every time you open a Level.

I’ve also added support for adding dedicated Editor Mode buttons back to the main toolbar, next to the Modes drop-down. This even supports custom EdModes! And there is a toggle to hide the drop-down, if you want to go all the way back to the early UE5.0 UX.

I’m looking for ideas for other Editor Quality-of-Life features that I can try to implement, so please feel free to connect if you have a suggestion.

Looking forward…

One of the most disappointing aspects of all the technology I helped to bring into existence over the past half-decade is that it is largely trapped inside Unreal Engine and Editor. Now don’t get me wrong, UE is a great platform. However, it also requires hundreds of gigabytes of disk space, at least 64 GB of RAM, and a high-end CPU and GPU - at Epic my workstation cost over $20,000! UE Editor has a wildly complex user interface, to the point where it’s kinda impossible to build “good UX” for new tools because you are trapped inside a legacy UX nightmare. The barriers to even dipping a toe into UE are extremely high in terms of both financial and time investment. Getting data in and out is also a real challenge, and so nobody seriously uses UE as part of a DCC-like workflow, like you might use Blender or Maya.

So, naturally, I decided to build my new creative tools as an Unreal Editor Plugin, where I get to inherit all those constraints in addition to the “standard” indie-developer problems of trying to get anyone to even know about my stuff, let alone try it. Totally makes sense!

The reason I chose this route goes back to why I joined Epic in the first place, which is that I see Unreal Engine as a great platform for shipping creative tools. Because of the work that I did there, I know it’s possible to build in a way that works both inside Unreal Editor and in standalone applications (where you won’t need a supercomputer). Building inside Unreal Editor first allows me to focus on some problems and defer others, and my work will still be useful from day one. I get to prototype and iterate with real users while I develop the low-level tech and try to sort out the high-level interaction paradigms. And at some point I can (metaphorically - after a lot of coding) flip a switch and release the same tools and workflows as part of a standalone application, that works on any platform a UE game can ship on.

I also have an eye on other engine platforms, so I am being careful to draw a hard line between code that should and shouldn’t be dependent on UE. For example my ModelGrid representation is implemented in fully standalone C++ libraries, that could be integrated into any engine or tool. I don’t rely on UE serialization, so it’s trivial to move a ModelGrid between UE and, say, a command-line tool that runs as part of a distributed procedural-content-generation pipeline. And ultimately, any binary data formats I introduce will have a text-based serialization option and an open-source parser, so you will never be locked out of your own data.
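As a sketch of what a text-based serialization option could look like, here is a trivial one-cell-per-line format with a versioned header. The format itself (`modelgrid-text 1`, `cell x y z shape r g b`) and the `TextCell` type are entirely hypothetical and are not what Gradientspace ships; the point is only that a round-trippable text form of grid cells needs very little machinery and no engine dependencies.

```cpp
#include <sstream>
#include <string>
#include <vector>

struct TextCell { int x, y, z; int shape; int r, g, b; };

// Hypothetical line-based text format, one cell per line:
//   cell <x> <y> <z> <shape> <r> <g> <b>
std::string SerializeCells(const std::vector<TextCell>& cells) {
    std::ostringstream out;
    out << "modelgrid-text 1\n";  // header with a format version (hypothetical)
    for (const auto& c : cells)
        out << "cell " << c.x << ' ' << c.y << ' ' << c.z << ' ' << c.shape << ' '
            << c.r << ' ' << c.g << ' ' << c.b << '\n';
    return out.str();
}

// Parses the format above; returns false on a bad header or malformed line.
bool ParseCells(const std::string& text, std::vector<TextCell>& cells) {
    std::istringstream in(text);
    std::string line;
    if (!std::getline(in, line) || line != "modelgrid-text 1") return false;
    while (std::getline(in, line)) {
        if (line.empty()) continue;
        std::istringstream ls(line);
        std::string tag;
        TextCell c{};
        if (!(ls >> tag >> c.x >> c.y >> c.z >> c.shape >> c.r >> c.g >> c.b) || tag != "cell")
            return false;
        cells.push_back(c);
    }
    return true;
}
```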

Looking at this feature set for the initial release, it might seem kinda random - why Mesh Import and Export? Why this weird no-gizmo transform tool? Well, things like mesh import and export are not built-in features of Unreal Engine’s Runtime. To give my kids a ModelGrid editor on an iPad that can be used to make content for Roblox, I’ll need that import and export functionality. So if I want to ship outside the Editor, I’ll need my own implementations of that kind of stuff.

And what about on a console? A tricky thing about a console is that there isn’t a mouse. UE can provide a 3D Translate-Rotate-Scale (TRS) Gizmo at runtime via the ITF, but it’s useless with a gamepad, and not great with touch input, either. This has been a blocker for many creative tools on consoles and tablets. We need a system for transforming objects that doesn’t rely on tiny gizmo handles…like perhaps by having various mappings from analog-stick input to 3D movement, that you can quickly switch between using button combinations…hmmm….
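As a hypothetical sketch of that idea, here is a small set of stick-to-motion mappings with a radial deadzone, plus a function that cycles between them on a button press. The mode set, the axis conventions (UE-style X forward, Y right, Z up, with stick-up mapped to forward), and the speeds are all assumptions.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical set of stick-to-motion mappings, cycled with a button.
enum class StickMode { GroundPlane, Vertical, Yaw };

struct StickInput { double x, y; };  // each axis in [-1, 1]
struct TransformDelta { double dx = 0, dy = 0, dz = 0, yawDegrees = 0; };

// Radial deadzone: a resting stick produces no motion, and the remaining
// range is rescaled so motion ramps up smoothly from zero.
StickInput ApplyDeadzone(StickInput s, double deadzone = 0.15) {
    assert(deadzone >= 0 && deadzone < 1);
    double len = std::sqrt(s.x * s.x + s.y * s.y);
    if (len <= deadzone) return {0, 0};
    double scale = (len - deadzone) / (1 - deadzone) / len;
    return {s.x * scale, s.y * scale};
}

// Map stick input to a per-frame transform change for the current mode.
TransformDelta MapStick(StickMode mode, StickInput raw, double dt,
                        double unitsPerSec, double degreesPerSec) {
    StickInput s = ApplyDeadzone(raw);
    TransformDelta d;
    switch (mode) {
        case StickMode::GroundPlane:
            d.dx = s.y * unitsPerSec * dt;  // stick up -> forward (+X)
            d.dy = s.x * unitsPerSec * dt;  // stick right -> right (+Y)
            break;
        case StickMode::Vertical:
            d.dz = s.y * unitsPerSec * dt;  // stick up -> up (+Z)
            break;
        case StickMode::Yaw:
            d.yawDegrees = s.x * degreesPerSec * dt;
            break;
    }
    return d;
}

// A face or shoulder button cycles through the mappings.
StickMode NextMode(StickMode m) {
    switch (m) {
        case StickMode::GroundPlane: return StickMode::Vertical;
        case StickMode::Vertical: return StickMode::Yaw;
        case StickMode::Yaw: return StickMode::GroundPlane;
    }
    return StickMode::GroundPlane;
}
```

Each mode constrains the stick to one or two degrees of freedom, which is what makes precise placement feasible without a pointer.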

Ultimately, although simultaneously shipping tools in-editor and at-runtime would be possible today, it wouldn’t be easy. So what I would like to end up with is infrastructure that lives on top of - or outright replaces - the ITF and Editor-isms like details panels and PDI rendering, which would allow anyone to easily create desktop creative-tool applications using Unreal Engine. I’ve spent enough time wrapping my head around the problem, now it’s time to do it.

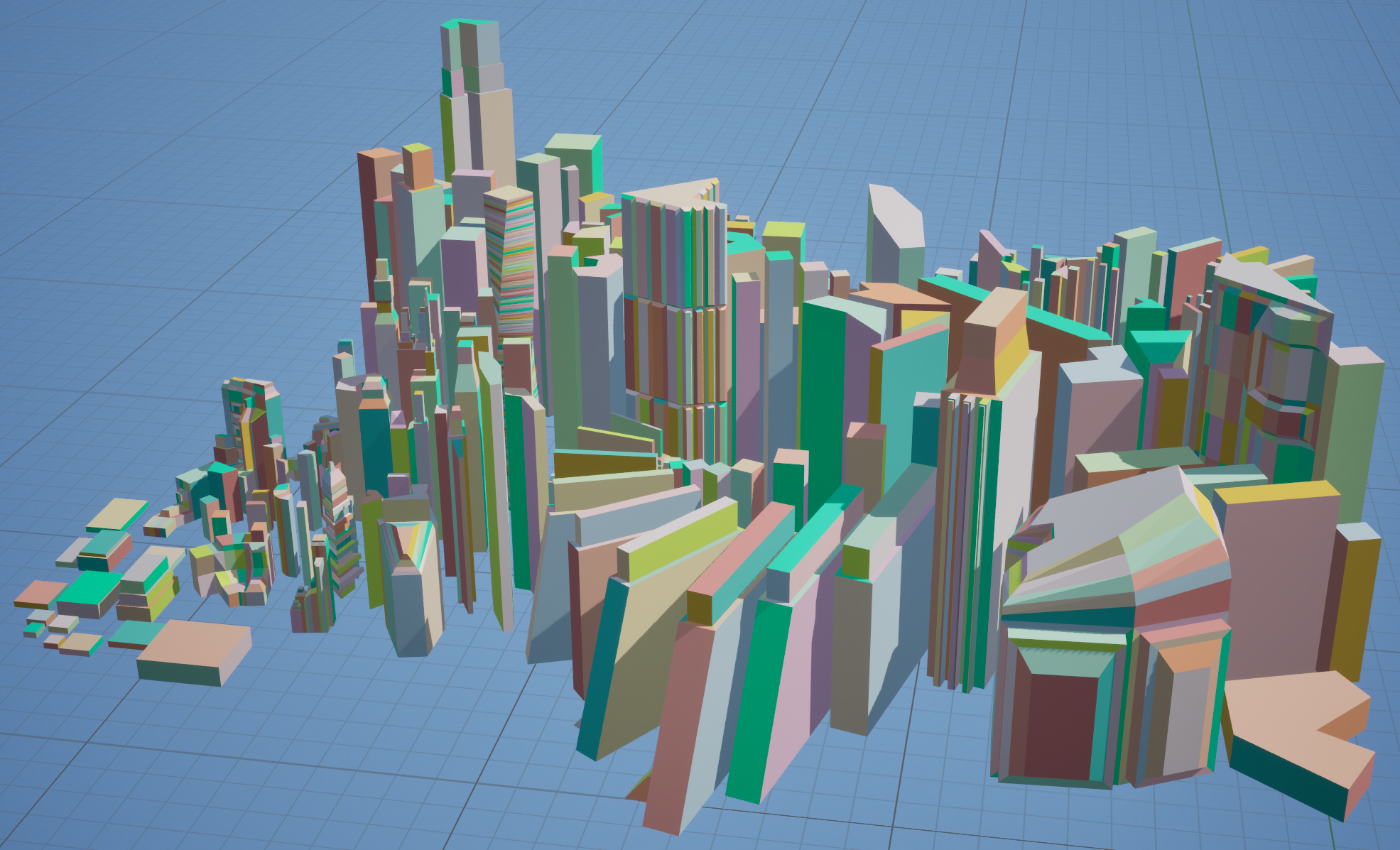

From ModelGrids to WorldGrids

A single ModelGrid object scales out to 128 blocks in any direction, which - if the blocks are a decent size - covers quite a bit of ground. You could definitely build a little village or castle as a ModelGrid, at which point you might want to walk around inside it. And you could certainly tile a bunch of adjacent ModelGrids to create a larger world. At some point it starts to feel like a kind of homebrew landscape/environment system…and so I figured I might as well just work on that too…
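Tiling ModelGrids means converting a world-space cell index into a (tile, local cell) pair. The sketch below assumes each tile is one ModelGrid spanning 128 cells per axis, which is one reading of the size limit above; the only subtle part is using floor division so that negative coordinates land in the right tile. The names are hypothetical.

```cpp
#include <cassert>

// Assumption: each tile in the world is one ModelGrid spanning 128 cells per axis.
constexpr int kTileCells = 128;

// Floor division and the matching non-negative remainder. Plain C++ `/` and `%`
// truncate toward zero, which would put cell -1 in tile 0 instead of tile -1.
int FloorDiv(int a, int b) {
    assert(b > 0);
    int q = a / b;
    if (a % b != 0 && a < 0) --q;
    return q;
}
int FloorMod(int a, int b) { return a - FloorDiv(a, b) * b; }

struct TileCoord {
    int tx, ty, tz;  // which ModelGrid tile
    int lx, ly, lz;  // cell index inside that tile, each in [0, kTileCells)
};

TileCoord WorldCellToTile(int x, int y, int z) {
    return {FloorDiv(x, kTileCells), FloorDiv(y, kTileCells), FloorDiv(z, kTileCells),
            FloorMod(x, kTileCells), FloorMod(y, kTileCells), FloorMod(z, kTileCells)};
}
```

With this mapping, editing a cell on a tile boundary only touches one tile, although a mesher would still need to look at neighboring tiles to decide which boundary faces are hidden.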

This isn’t something I intend to ship soon, but I have a functional prototype of a scalable environment built out of tiled ModelGrids, where everything you can do in the UE Toolbox’s Grid Editor can be supported. Basically the thinking here is to allow creators to build ModelGrid objects that can be placed in ModelGrid worlds, like a stamp or template (or “prefab”, if you will). Things like being able to modify the environment at runtime, with automatic collision generation, are basically a given in this kind of system. If you squint, it nearly has the makings of a sandbox game already…

One thing that I find very frustrating about many “Creative Modes” you find in games is that they rarely provide any higher-level tools or workflows, that would let people really leverage their often-quite-powerful world-building technology without having to place every element one-by-one. Even basic creative essentials like undo are rare to see. What I always want is to be able to pop out of “first person” mode into an “edit mode” that can provide a much fuller creative-tool experience. This is what I had hoped the Runtime-ITF would have been able to do for things like Fortnite Creative. But it seems like I’m going to have to build it myself.

The video above shows a short demo of a procedurally-generated live-editable ModelGrid-based world, where I will place a few blocks in third-person view and then jump out to “edit mode” where I can have proper 3D camera controls and freely edit the world (with undo!). The Edit Mode UI in the video is just a quick bit of UMG, but once I have a desktop-app version of the plugin’s ModelGrid Editor Tool, it will slot right in. I’ve also got some other things cooking, like animated ModelGrid objects - see below-left for an example where I made a little animated pixel-art importer.

A neat little pixel-art animation my kid made!

That pixel art imported as a multi-frame ModelGrid animation

A ModelGrid can be meshed, and that mesh can be processed. In this example I applied constrained mesh smoothing, to produce something more like a landscape.

And finally, although a ModelGrid has its own visual aesthetic, you might be thinking about things that are less chunky, like say a large-scale terrain. A ModelGrid can be converted to a continuous surface mesh, at which point it can be the starting point for a procedural generator. Above-right is an example where I did just a tiny bit of basic remeshing and smoothing, to get something closer to a traditional UE landscape (except you might notice a few overhangs…). But the grid is still there, just hidden - so it’s not implausible that, if you wanted to edit the terrain live in-game, you could still do it via the underlying ModelGrid. And I think that maintaining this underlying spatial organization structure - even if it’s a relatively coarse volumetric representation of a “smooth” landscape - can address a lot of “game problems” like LOD, Culling, Physics, Pathfinding, other Gameplay systems, and Simulations… it’s a long list.

I’ll end it here with a pointer to the Gradientspace Discord - if you are using the UE Toolbox, this is the best place to come and ask for help. I’ll be there, and happy to also chat about things like the Interactive Tools Framework, Geometry Scripting, UE Modeling Mode, and other related topics that I know a thing or two about. Thanks for reading!!

-rms80